Debug Google Search Traffic Drops: Step-by-Step Guide

In September 2023, Google’s Search Central team published a comprehensive resource titled “Debug Google Search Traffic Drops”. The guide was created to help site owners, SEO specialists, and developers analyze situations where organic traffic from Google Search suddenly declines. Instead of leaving webmasters guessing, this official document outlines systematic steps to identify potential causes and determine whether a drop is normal, expected, or a sign that something is wrong.

(Source: Google Search Central Documentation)

Traffic drops can happen for many reasons — some natural, others technical. For instance, seasonality may explain fluctuations in user demand, while Google system updates or indexing issues may impact site visibility. This guide emphasizes that not all traffic loss is caused by penalties or algorithmic problems. In fact, many declines fall under “normal changes.”

By providing a structured workflow, Google’s documentation allows professionals to:

- Distinguish natural, expected declines from technical or content-related problems.

- Analyze traffic patterns across devices, regions, and time frames.

- Correlate changes in site performance with known Google updates.

- Apply debugging techniques using Search Console data, analytics insights, and structured reporting.

For SEO practitioners and businesses that rely on organic visibility, understanding these distinctions is critical. A sudden traffic drop may trigger panic, but with the right debugging process, most issues can be identified and addressed systematically.

This article explores Google’s official debugging framework in detail. Each part breaks down a specific element of the workflow, ensuring site owners have actionable steps for diagnosing and resolving traffic fluctuations.

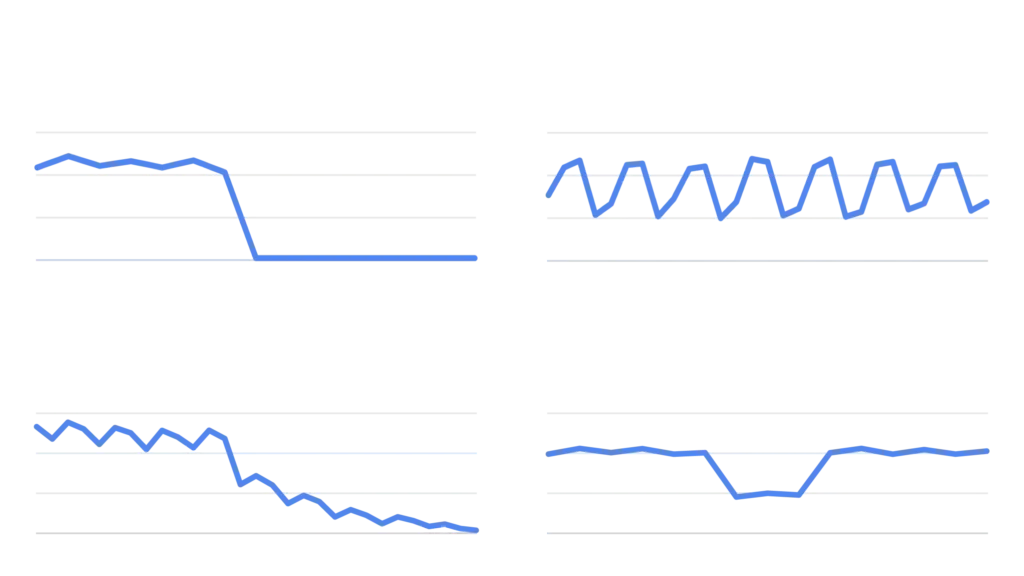

Types of Google Traffic Drops: Normal vs. Problematic

Google emphasizes that not all search traffic declines should be treated as red flags. Some are perfectly normal and reflect changes in user behavior or seasonal demand. Others, however, may indicate technical issues or content-related problems that require immediate attention. Understanding the difference is the first step in debugging.

Normal changes

Certain drops occur naturally and don’t require fixes. These may include:

- Seasonality in user behavior: For example, an eCommerce store selling school supplies will likely see reduced search interest after back-to-school season. Similarly, travel-related sites often see peaks during holiday periods and declines off-season.

- Industry-wide changes: A decline may reflect broader shifts in demand, such as fewer people searching for “office furniture” if more companies adopt remote work.

- Trending topics fading: If your site benefited from a viral topic or short-term news trend, traffic will naturally decline once public interest fades.

In these cases, the issue isn’t your website but the external environment. No optimization or fix is needed.

Problematic changes

Other drops signal potential issues that need investigation. These may include:

- Technical issues with crawling or indexing: If Googlebot can’t access your pages, or if important content is accidentally deindexed, visibility will drop sharply.

- Search appearance changes: Structured data or rich result eligibility might be lost, causing a decrease in impressions.

- Content relevance issues: Updates to Google’s ranking systems may reduce visibility for content that no longer aligns with search intent or quality guidelines.

- Manual actions or policy violations: If your site violates Google’s spam or quality policies, manual penalties may result in traffic loss.

By separating normal fluctuations from problematic ones, site owners can avoid wasted effort on issues that don’t exist. This classification also prevents misattributing natural demand changes to algorithm updates or penalties.

Step-by-Step Debugging Workflow: Starting with Search Console

The first step in understanding traffic drops is to verify data directly from Google Search Console (GSC). Google’s documentation stresses that Search Console is the most reliable source for search performance metrics since it reports actual data from Google Search itself.

(Source)

1. Confirm the timing of the drop

Open the Performance report in Search Console and adjust the date range to include both the current period and several weeks or months prior. Look for:

- Exact dates when impressions or clicks declined

- Whether the decline was sudden or gradual

- Any correlation with known Google updates (use Google’s Search Status Dashboard for cross-checking)

By identifying the precise timeline, you can begin matching the decline with potential causes such as system updates, site changes, or seasonal events.
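As a rough illustration of pinning down the timeline, the sketch below scans a daily clicks series (for example, a Performance report export) and reports the date where the following week's average falls furthest below the preceding week's average. The function name, the 7-day window, and the 30% threshold are assumptions chosen for this example, not values from Google's documentation.

```python
from datetime import date, timedelta
from statistics import mean

def find_drop_start(daily_clicks, window=7, threshold=0.3):
    """Return (date, fractional_drop) for the split point with the largest
    relative drop between the `window` days before and the `window` days
    from that date onward, or None if no drop reaches `threshold`.

    daily_clicks: list of (date, clicks) tuples sorted by date.
    """
    best_date, best_drop = None, 0.0
    for i in range(window, len(daily_clicks) - window + 1):
        before = mean(c for _, c in daily_clicks[i - window:i])
        after = mean(c for _, c in daily_clicks[i:i + window])
        if before <= 0:
            continue
        drop = (before - after) / before
        if drop > best_drop:
            best_date, best_drop = daily_clicks[i][0], drop
    return (best_date, best_drop) if best_drop >= threshold else None

# Hypothetical data: 14 steady days, then clicks halve from day 15 onward.
start = date(2024, 3, 1)
series = [(start + timedelta(days=d), 1000 if d < 14 else 500) for d in range(28)]
print(find_drop_start(series))  # (datetime.date(2024, 3, 15), 0.5)
```

A sudden drop produces one sharp maximum like this. A gradual decline tends to produce smaller values spread over many dates, so it may never cross the threshold.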

2. Compare affected segments

Search Console allows filtering by device, country, and search appearance. Comparing these dimensions is crucial:

- Device type: Did the drop happen on mobile, desktop, or both?

- Geographic region: Was the decline limited to certain countries or global?

- Search appearance: Were rich results, featured snippets, or other enhancements lost during the drop?

Patterns across these filters often point toward the root cause. For example, a drop limited to mobile could indicate mobile usability or indexing issues.
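One simple way to compare segments is to compute the relative change per segment between two periods. The sketch below assumes you have already totaled clicks per device for each period; the segment names and numbers are made up for illustration.

```python
def segment_changes(before, after):
    """Return {segment: fractional change in clicks} between two periods.

    before/after: dicts mapping a segment name to total clicks.
    Segments with zero clicks in the earlier period map to None.
    """
    changes = {}
    for segment, old in before.items():
        new = after.get(segment, 0)
        changes[segment] = (new - old) / old if old else None
    return changes

# Hypothetical totals for two equal-length periods.
before = {"MOBILE": 8000, "DESKTOP": 4000, "TABLET": 500}
after = {"MOBILE": 4000, "DESKTOP": 3900, "TABLET": 490}

changes = segment_changes(before, after)
worst = min((s for s in changes if changes[s] is not None), key=changes.get)
print(worst, changes[worst])  # MOBILE -0.5
```

The same function works for countries or search appearance types, since it only needs a mapping from segment name to clicks.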

3. Validate indexing status

Navigate to the Pages report under Indexing. Check whether key pages are still indexed or if errors appeared around the time of the decline. Look out for:

- Sudden spikes in “Excluded” or “Not indexed” pages

- Errors such as “Submitted URL marked ‘noindex’”, “Crawled – currently not indexed”, or “Blocked by robots.txt”

- Major shifts in the number of valid indexed pages

4. Cross-check site changes

If the timing of the drop coincides with a site migration, redesign, robots.txt update, or content changes, those actions should be reviewed. Search Console’s URL Inspection tool can help verify whether Google can access and index individual pages properly.

By using Search Console first, site owners can confirm whether the decline is linked to indexing, crawling, or search appearance issues. This structured approach prevents guesswork and allows narrowing down potential causes before deeper investigation.

Analyzing Traffic Patterns in Analytics and External Tools

While Search Console provides the most direct data about search performance, it is equally important to cross-check with Google Analytics (or other analytics platforms). Doing so helps confirm whether a drop is truly search-related or reflects a broader issue like reduced user demand or tracking errors.

(Source)

1. Validate traffic source attribution

In Google Analytics, segment traffic by Source/Medium to confirm that the decline is specifically from google / organic. Sometimes a drop may be mistaken for a search issue when in reality:

- Paid campaigns ended or shifted, causing total traffic to fall.

- Referral traffic decreased due to partnerships or backlinks disappearing.

- Direct traffic dropped because of branding or offline promotions ending.

2. Compare time periods

Look at year-over-year (YoY) and month-over-month (MoM) trends:

- Seasonal businesses (e.g., tourism, retail, education) often see predictable dips and spikes.

- Comparing current performance with the same period last year helps reveal whether a decline is unusual or expected.
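The two comparisons can be sketched as a pair of percentage changes. The monthly figures below are invented to show a case where the month-over-month number looks alarming but the year-over-year number does not.

```python
def pct_change(current, previous):
    """Percentage change from `previous` to `current`, or None if undefined."""
    if previous == 0:
        return None
    return (current - previous) / previous * 100

# Hypothetical organic clicks per month.
monthly_clicks = {"2023-05": 12000, "2024-04": 15000, "2024-05": 12300}

mom = pct_change(monthly_clicks["2024-05"], monthly_clicks["2024-04"])
yoy = pct_change(monthly_clicks["2024-05"], monthly_clicks["2023-05"])
print(f"MoM: {mom:.1f}%  YoY: {yoy:.1f}%")  # MoM: -18.0%  YoY: 2.5%
```

Here May is 18% below April but slightly above the previous May, which points toward a seasonal pattern rather than a new problem.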

3. Identify affected pages

Using the Landing Pages report in Analytics, identify which pages lost traffic. Combine this with Search Console’s Pages tab to see if both datasets confirm the same URLs are impacted.

- If only one section of the site is affected, the issue may be localized (e.g., category page templates, structured data errors).

- If the entire site is affected, broader factors like sitewide indexing, system updates, or technical errors may be the cause.
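To see whether a drop is localized, it can help to roll page-level clicks up by site section. The sketch below groups URLs by their first path segment, which is an assumption about how the site is organized. The URLs and click counts are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlparse

def clicks_by_section(page_clicks):
    """Sum clicks per top-level path segment, e.g. '/blog' or '/shop'."""
    totals = defaultdict(int)
    for url, clicks in page_clicks.items():
        segments = [s for s in urlparse(url).path.split("/") if s]
        section = "/" + segments[0] if segments else "/"
        totals[section] += clicks
    return dict(totals)

# Hypothetical page-level clicks for two periods.
before = {
    "https://example.com/blog/a": 500,
    "https://example.com/blog/b": 300,
    "https://example.com/shop/x": 400,
}
after = {
    "https://example.com/blog/a": 480,
    "https://example.com/blog/b": 310,
    "https://example.com/shop/x": 100,
}

print(clicks_by_section(before))  # {'/blog': 800, '/shop': 400}
print(clicks_by_section(after))   # {'/blog': 790, '/shop': 100}
```

In this example the blog is essentially flat while the shop section lost most of its clicks, so the investigation would start with the shop templates.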

4. Segment by audience and geography

Analytics data also allows filtering by audience type (new vs. returning visitors) and geographic location:

- A drop in new visitors may suggest reduced visibility in search.

- A drop in returning visitors could signal user experience or loyalty issues.

- Geographic traffic shifts may indicate regional updates, regulatory changes, or even server-related latency.

5. Confirm tracking integrity

Always rule out issues with Analytics tracking code itself:

- Was tracking code accidentally removed during a site update?

- Are duplicate tracking IDs causing inflated or skewed numbers?

- Did you migrate to GA4 but misconfigure event/parameter settings?

By correlating Search Console with Analytics data, site owners gain a holistic picture. If both datasets align, the drop is genuine. If discrepancies exist, tracking problems may be the culprit.
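The comparison between the two datasets can be expressed as a small check. The labels and the 10-percentage-point tolerance below are assumptions for illustration. Search Console clicks and Analytics sessions are measured differently, so the two never match exactly.

```python
def compare_sources(gsc_change, analytics_change, tolerance=0.10):
    """Compare fractional changes (e.g. -0.40 for a 40% drop) from
    Search Console clicks and Analytics organic sessions."""
    if abs(gsc_change - analytics_change) <= tolerance:
        return "aligned"
    if analytics_change < gsc_change:
        return "analytics_lower"
    return "search_console_lower"

print(compare_sources(-0.40, -0.38))  # aligned
print(compare_sources(-0.02, -0.60))  # analytics_lower
```

An "analytics_lower" result, where Analytics fell much further than Search Console, is the pattern that would prompt a look at the tracking setup first.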

Cross-Referencing with Google Updates and System Changes

Not every traffic drop is tied to your site alone. Sometimes, Google Search undergoes updates or experiences system issues that can affect site visibility. The debugging process should always include verifying whether external factors played a role.

(Source)

1. Use the Google Search Status Dashboard

Google maintains the Search Status Dashboard, which provides real-time and historical updates on:

- Ranking updates (core updates, helpful content updates, product reviews, spam updates)

- Crawling issues (temporary Googlebot disruptions)

- Indexing issues (bugs that may delay or prevent indexing)

- Serving issues (errors that impact how search results are displayed)

Check whether your traffic drop timeline aligns with one of these updates or reported issues.

2. Correlate update dates with Search Console data

If impressions and clicks fell on or around the date of a confirmed update, it’s possible the drop reflects changes in how Google evaluates content. For example:

- A core update may reprioritize certain signals like relevance and authority.

- A product reviews update may reduce visibility of thin review pages in favor of in-depth, user-first content.
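Checking a drop date against update rollout windows is a simple date-range comparison. In the sketch below, the update names and dates are placeholders, not real Google updates. In practice you would copy the rollout dates from the Search Status Dashboard. The 3-day margin is also an assumption.

```python
from datetime import date, timedelta

def updates_near(drop_date, updates, max_days=3):
    """Return names of updates whose rollout window, padded by `max_days`
    on each side, contains `drop_date`.

    updates: list of (name, start_date, end_date) tuples.
    """
    margin = timedelta(days=max_days)
    return [
        name
        for name, start, end in updates
        if start - margin <= drop_date <= end + margin
    ]

# Placeholder entries for illustration only.
updates = [
    ("example core update", date(2024, 3, 5), date(2024, 3, 20)),
    ("example spam update", date(2024, 6, 1), date(2024, 6, 8)),
]

print(updates_near(date(2024, 3, 15), updates))  # ['example core update']
print(updates_near(date(2024, 4, 15), updates))  # []
```

A match only shows that the timing overlaps. It does not prove the update caused the drop.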

3. Distinguish between temporary vs. long-term impacts

- System issues (like crawling or indexing bugs) are usually temporary and resolved once Google fixes the bug.

- Ranking system updates may have a longer-lasting impact and require content or site improvements to recover.

4. Avoid over-attribution

It’s important not to assume that every drop is tied to an update. Google stresses that site-specific issues, such as technical errors or content quality, are often more influential than external updates. Updates are one possible factor, not the automatic explanation.

By cross-referencing with Google’s official update logs and system reports, site owners can confirm whether their drop is part of a wider trend or unique to their website.

Investigating Technical Issues: Crawling, Indexing, and Rendering

Technical issues are among the most common causes of unexpected traffic declines. Even a small configuration mistake can prevent Googlebot from crawling or indexing critical pages, which directly impacts search visibility.

(Source)

1. Crawling issues

Crawling errors stop Googlebot from accessing your site. Check the following:

- Robots.txt misconfigurations: A single `Disallow: /` line can block the entire site.

- Server downtime: Temporary outages or slow server responses can limit Googlebot’s ability to crawl.

- Rate limiting: If your site restricts requests, Googlebot may not crawl enough pages to keep them fresh in the index.

➡️ Use Search Console’s Crawl Stats report to confirm whether crawl activity dropped around the same time as your traffic loss.
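To illustrate how a single rule can block everything, the sketch below feeds robots.txt text to Python's standard-library parser and lists which URLs a given user agent may not fetch. The helper name and sample URLs are assumptions. Note that `urllib.robotparser` does not replicate Google's wildcard matching, so treat it as a quick sanity check rather than a substitute for Search Console.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that `user_agent` is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]

urls = ["https://example.com/admin/login", "https://example.com/blog/"]

# A single site-wide Disallow blocks every URL.
block_all = "User-agent: *\nDisallow: /\n"
print(blocked_urls(block_all, urls))
# ['https://example.com/admin/login', 'https://example.com/blog/']

# A scoped rule blocks only the matching path.
block_admin = "User-agent: *\nDisallow: /admin/\n"
print(blocked_urls(block_admin, urls))
# ['https://example.com/admin/login']
```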

2. Indexing issues

Even if crawling succeeds, pages might fail to index. Look for:

- Sudden increases in “Excluded” pages in the Indexing > Pages report.

- Pages marked `noindex` that should be indexable.

- Canonicalization errors, where Google chooses the wrong canonical version.

- Duplicate content across parameters or subdomains, which can dilute indexing signals.

➡️ Use the URL Inspection tool in Search Console to confirm index status for representative URLs.
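For a quick local check of templates, a robots meta tag can be detected with the standard-library HTML parser. This is a minimal sketch: the class and function names are made up for the example, and it only inspects meta tags in the HTML. A `noindex` sent through the `X-Robots-Tag` HTTP header would not be caught.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            self.directives += [
                d.strip().lower() for d in content.split(",") if d.strip()
            ]

def has_noindex(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
print(has_noindex('<head><meta name="robots" content="index, follow"></head>'))    # False
```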

3. Rendering issues

Google uses a headless Chromium renderer to process JavaScript. If your content relies heavily on client-side rendering, errors may cause incomplete or blank pages to be indexed. Check for:

- Blocked JS or CSS resources (verify with URL Inspection → Page resources).

- Lazy-loading without fallback (content never visible to crawlers).

- Dynamic rendering mismatches (different content served to users vs. crawlers).

4. Site migrations and structural changes

Traffic drops often follow big technical events:

- Domain migrations without proper 301 redirects.

- Switching to HTTPS but leaving mixed-content errors.

- Restructured URLs where internal links or sitemaps were not updated.

Technical missteps can cause large visibility losses, but they are also among the most fixable. By carefully auditing crawlability, indexability, and rendering, you can often identify root causes and recover search performance.

Content Quality, Relevance, and Search Intent Alignment

Beyond technical factors, a site’s content quality and relevance to user intent play a central role in search visibility. Google’s ranking systems continuously refine how they evaluate whether content meets users’ needs. If your traffic drops align with a core update or quality reassessment, content is often the primary factor.

(Source)

1. Relevance to search intent

Search intent evolves. A query that once favored long-form guides may now favor quick answers, videos, or transactional pages. If your content no longer matches intent, rankings may decline.

- Example: A broad article on “fitness tips” may lose ground to specialized results like “home workout routines” or “nutrition plans.”

- Solution: Reassess queries driving traffic and align content with current user intent.

2. Depth and originality of content

Thin or generic content struggles when Google raises the bar for usefulness. Signals include:

- Overly short posts that don’t answer the query fully.

- Articles rephrased from other sources without unique value.

- Pages cluttered with ads, making main content secondary.

➡️ Apply Google’s Helpful Content Guidelines to ensure originality, depth, and clarity.

3. Experience, Expertise, Authoritativeness, and Trust (E-E-A-T)

Content that demonstrates expertise and trust is more resilient to updates. Google recommends:

- Citing sources, especially in YMYL (Your Money or Your Life) topics.

- Using clear bylines and author bios.

- Updating outdated information regularly.

4. Content freshness

Search interest often favors updated material. If competitors refresh pages more often, your older content may lose visibility even if technically sound.

➡️ Refresh by updating data, expanding examples, and adding new subtopics.

5. User engagement signals

While Google doesn’t confirm direct use of metrics like bounce rate, user behavior provides indirect quality signals. High pogo-sticking (users clicking back immediately) can imply content mismatch.

Improving content quality requires a holistic approach: aligning with search intent, offering unique insights, and demonstrating authority. This is especially crucial when recovering from traffic losses tied to Google’s ranking updates.

Manual Actions and Policy Violations

While most traffic drops are due to algorithmic changes, seasonality, or site-level issues, Google also applies manual actions when sites violate its spam or quality policies. A manual action can result in partial or sitewide loss of visibility, and it requires corrective action before recovery is possible.

1. What is a manual action?

A manual action is when a human reviewer at Google determines that your site violates search policies. Common reasons include:

- Cloaking or deceptive redirects: Showing different content to users vs. crawlers.

- Spammy structured data: Marking up irrelevant or misleading content.

- User-generated spam: Unmoderated comment sections or forums filled with spam links.

- Link schemes: Buying or selling links to manipulate rankings.

- Thin, automatically generated, or scraped content designed to manipulate search.

2. How to detect manual actions

Manual actions are reported directly in Google Search Console:

- Go to Security & Manual Actions > Manual Actions.

- If no manual action is listed, your site is not under penalty.

- If a manual action is listed, it will specify the violation and scope (sitewide or partial).

3. Steps to resolve

- Review Google’s Search Essentials (formerly Webmaster Guidelines).

- Fix all instances of the violation (remove deceptive redirects, moderate spam, disavow or clean unnatural links, etc.).

- Submit a reconsideration request in Search Console explaining the fixes.

- Wait for Google’s response (manual reviews can take days or weeks).

4. Long-term prevention

Avoiding manual actions requires ongoing compliance with Google’s spam and quality policies. Proactive monitoring, moderation, and transparent practices help maintain trust and avoid penalties.

Manual actions are less common than algorithmic ranking changes, but their impact can be severe. Unlike natural fluctuations, recovery requires direct fixes and Google’s approval.

Competitor and Industry Trend Analysis

When debugging traffic declines, it’s important to determine whether the drop is isolated to your site or reflects a larger shift across your industry. Google’s guidance emphasizes the value of benchmarking against competitors and analyzing search interest trends.

(Source)

1. Use Google Trends

Google Trends provides insight into whether fewer people are searching for terms in your niche.

- If demand for a keyword has declined industry-wide, a drop in impressions is likely due to reduced search volume rather than site issues.

- For example, if “winter jackets” searches fall sharply in spring, all retailers in that niche will see less traffic.

2. Monitor competitor visibility

Third-party SEO tools (like SEMrush, Ahrefs, Sistrix) can help approximate how competitors are performing. While not official Google data, they can reveal whether similar drops occurred in competing domains.

- If competitors also lost traffic, an external factor like a Google update or demand shift may be at play.

- If competitors maintained or gained traffic, the issue may be specific to your site’s content, structure, or authority.

3. Compare SERP changes

Search results evolve constantly. A decline may stem from new SERP features that push traditional results lower. Examples include:

- More People Also Ask boxes.

- Featured snippets taking clicks.

- Shopping ads or product carousels dominating above-the-fold.

If competitors adapted to these changes (e.g., by optimizing for featured snippets or structured product data) while your site did not, visibility differences may explain the gap.

4. Use Search Console’s “Compare” feature

Within GSC’s Performance report, compare your site’s queries across two date ranges. Look for:

- Keywords lost to competitors

- Shifts in CTR due to SERP layout changes

- Device-specific differences if competitors have more mobile-friendly experiences
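When comparing two periods for a query, it helps to separate "fewer impressions" from "same impressions, lower CTR". The sketch below does that with a simple rule. The 10% stability band and the labels are assumptions for illustration.

```python
def ctr(clicks, impressions):
    """Click-through rate as a fraction; 0.0 when there are no impressions."""
    return clicks / impressions if impressions else 0.0

def classify_query(before, after, stable=0.10):
    """Classify one query's change between two periods.

    before/after: (clicks, impressions) tuples.
    """
    imp_change = (after[1] - before[1]) / before[1] if before[1] else 0.0
    if imp_change <= -stable:
        return "impressions_down"
    if ctr(*after) < ctr(*before) * (1 - stable):
        return "ctr_down"
    return "stable"

print(classify_query((100, 1000), (40, 400)))   # impressions_down
print(classify_query((100, 1000), (50, 1000)))  # ctr_down
print(classify_query((100, 1000), (98, 1000)))  # stable
```

A "ctr_down" result with steady impressions is the pattern you would expect when the SERP layout changed around your listing. "impressions_down" points more toward ranking or demand changes.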

Competitor and industry benchmarking ensures you don’t misinterpret industry-wide changes as site-specific problems. This context helps focus your debugging efforts where they matter most.

Evaluating Site Changes, Migrations, and Updates

When debugging traffic drops, one of the most overlooked causes is recent changes made to the site itself. Google emphasizes that many declines align with site migrations, redesigns, or large-scale content updates. These internal changes can unintentionally disrupt crawling, indexing, or ranking signals.

(Source)

1. Check for site migrations

Traffic drops are common after:

- Moving from HTTP to HTTPS without setting proper redirects.

- Changing domain names or merging multiple domains.

- Restructuring URL paths without updating internal links and sitemaps.

➡️ Always confirm that 301 redirects are properly implemented and that canonical tags point to the new URLs.
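A redirect audit can be sketched as a comparison between the redirect map you intended and what the server actually returned. The example below assumes the responses were collected separately (for instance by a crawler) into a dictionary of status code and `Location` header per old URL. All URLs are hypothetical, and the check follows this article in expecting 301 specifically.

```python
def audit_redirects(expected, observed):
    """Return {old_url: problem description} for redirects that are off.

    expected: {old_url: new_url}
    observed: {old_url: (status_code, location_header)}
    """
    problems = {}
    for old, new in expected.items():
        status, location = observed.get(old, (None, None))
        if status is None:
            problems[old] = "not checked"
        elif status != 301:
            problems[old] = f"status {status}, expected 301"
        elif location != new:
            problems[old] = f"redirects to {location}, expected {new}"
    return problems

expected = {
    "http://example.com/a": "https://example.com/a",
    "http://example.com/b": "https://example.com/b",
    "http://example.com/c": "https://example.com/c",
}
observed = {
    "http://example.com/a": (301, "https://example.com/a"),
    "http://example.com/b": (302, "https://example.com/b"),
    "http://example.com/c": (301, "https://example.com/"),
}

for url, problem in audit_redirects(expected, observed).items():
    print(url, "->", problem)
```

In this sample, `/b` uses a temporary redirect and `/c` points at the homepage instead of its new equivalent. Both are common migration mistakes.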

2. Redesigns and template changes

Visual redesigns can inadvertently break SEO-critical elements, such as:

- Removing or altering internal links.

- Accidentally blocking JS/CSS resources.

- Changing `<title>` tags, headings, or meta descriptions.

➡️ Use the Page resources list in Search Console’s URL Inspection tool, along with Lighthouse audits, to validate accessibility.

3. Content updates or deletions

When large portions of content are updated or removed, Google’s understanding of site relevance may shift. Examples:

- Consolidating articles but failing to redirect the old URLs.

- Removing thin content without creating replacements.

- Changing keyword targeting drastically.

4. Structured data changes

If you previously qualified for rich results but schema markup was removed or altered, impressions may decline. This can look like a ranking drop but is actually a change in search appearance.
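One way to spot lost markup is to compare the JSON-LD `@type` values in an old and a new version of a page. The sketch below uses only the standard library. The class and function names are assumptions, and it reads top-level objects only, so types nested under `@graph` or in Microdata and RDFa would be missed.

```python
import json
from html.parser import HTMLParser

class JsonLdParser(HTMLParser):
    """Collect top-level @type values from JSON-LD script blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.types = set()

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag != "script" or not self._in_jsonld:
            return
        self._in_jsonld = False
        try:
            doc = json.loads("".join(self._buffer))
        except json.JSONDecodeError:
            return  # Invalid JSON-LD contributes no types.
        for item in doc if isinstance(doc, list) else [doc]:
            value = item.get("@type") if isinstance(item, dict) else None
            if isinstance(value, str):
                self.types.add(value)
            elif isinstance(value, list):
                self.types.update(v for v in value if isinstance(v, str))

def jsonld_types(html):
    parser = JsonLdParser()
    parser.feed(html)
    return parser.types

def lost_types(old_html, new_html):
    """Types present in the old page's JSON-LD but missing from the new one."""
    return jsonld_types(old_html) - jsonld_types(new_html)

old_page = '<script type="application/ld+json">{"@type": "Product", "name": "Widget"}</script>'
new_page = "<html><body><h1>Widget</h1></body></html>"
print(lost_types(old_page, new_page))  # {'Product'}
```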

5. Server and hosting modifications

Even changes at the infrastructure level matter:

- Migrating to a new hosting provider can cause downtime.

- Incorrect caching/CDN settings may serve outdated or inconsistent versions to crawlers.

When investigating traffic declines, always correlate the drop with your site change logs, CMS version updates, or developer releases. If a decline aligns exactly with a deployment, the issue is often internal.

Google’s Recommended Debugging Workflow

After analyzing possible causes — from technical issues and content quality to competitor trends and site changes — Google recommends a structured debugging workflow. This process ensures site owners don’t jump to conclusions but instead methodically isolate the root cause.

(Source)

Step 1: Identify the drop

- Use Search Console Performance report to pinpoint when the decline started.

- Segment by device, country, and search appearance to identify where the drop occurred.

Step 2: Rule out normal changes

- Cross-check with Google Trends to see if search demand declined.

- Compare with competitor visibility to confirm if the drop is industry-wide.

- Consider seasonality or short-lived interest trends.

Step 3: Check for external factors

- Review the Search Status Dashboard for known Google updates or issues.

- Align the drop timeline with ranking system updates (e.g., core, product reviews, spam).

Step 4: Investigate technical health

- Inspect crawl stats, indexing errors, and rendering issues in Search Console.

- Audit robots.txt, sitemaps, canonical tags, and redirects.

- Validate that important pages remain accessible and indexable.

Step 5: Assess content quality and relevance

- Revisit Google’s Helpful Content Guidelines.

- Ensure content matches evolving search intent.

- Demonstrate expertise and update stale information.

Step 6: Check for manual actions or policy violations

- In Search Console, review Manual Actions.

- If a penalty exists, fix violations and file a reconsideration request.

Step 7: Review site changes

- Cross-reference traffic drop dates with site migrations, redesigns, or deployments.

- Confirm redirects, structured data, and internal linking remain intact.

Step 8: Monitor recovery or continued decline

- After fixes, use Search Console and Analytics to monitor whether visibility stabilizes.

- Recovery from technical fixes may be quick, but quality-related drops often require weeks or months as Google reprocesses updated content.

This systematic approach minimizes panic and wasted effort. Instead of treating every drop as a penalty or algorithm issue, site owners can methodically eliminate possibilities until the true cause emerges.

Conclusion & Key Takeaways

Google’s official documentation on debugging traffic drops makes one point clear: not every decline is a disaster. Drops in search performance can arise from natural changes in user demand, industry seasonality, or SERP design — all of which may not require fixes. The key is to follow a structured workflow that separates normal fluctuations from genuine issues.

Key takeaways for site owners and SEO professionals:

- Start with Search Console

- Pinpoint when and where the drop occurred.

- Segment by device, geography, and search appearance.

- Rule out normal shifts

- Use Google Trends to verify demand.

- Compare year-over-year data to account for seasonality.

- Check for external influences

- Consult the Search Status Dashboard for updates or issues.

- Distinguish between temporary bugs and long-term ranking updates.

- Audit your site’s technical health

- Confirm crawlability, indexability, and rendering.

- Ensure redirects, canonicals, and robots.txt are correctly configured.

- Evaluate content quality and intent alignment

- Ensure depth, originality, and freshness.

- Demonstrate expertise and trustworthiness (E-E-A-T).

- Look for manual actions or policy violations

- If penalties are listed in Search Console, fix them and submit for reconsideration.

- Review internal changes

- Align drop timelines with migrations, redesigns, or content deletions.

- Fix broken internal links or schema markup issues.

- Monitor after fixes

- Technical fixes may recover quickly.

- Quality-related recovery often requires patience as Google reevaluates your content.

Final thoughts

Traffic drops can be alarming, but Google provides clear steps for diagnosing and resolving them. By combining Search Console insights, analytics comparisons, update monitoring, and content reviews, site owners can pinpoint the true cause without guesswork. The most effective approach is systematic: rule out normal changes, check for external influences, and then focus inward on technical, content, and compliance factors.

For SEO specialists, adopting this structured debugging process not only ensures smoother recoveries but also builds long-term resilience against future fluctuations.

FAQ

The first step is to check Google Search Console’s Performance report to identify when the drop started and which pages, devices, or regions are affected.

No. Many drops are normal, such as seasonal demand changes, industry trends, or fading interest in a topic. Google emphasizes that not all declines require fixes.

Check the Google Search Status Dashboard. If your drop coincides with a confirmed update, it may be related. Otherwise, investigate technical or content issues on your site.

Yes. Robots.txt misconfigurations, noindex tags, broken redirects, or server downtime can prevent Googlebot from crawling and indexing, leading to major drops.

Technical fixes may recover quickly once Google reprocesses pages. Content quality improvements often take longer — sometimes weeks or months — as Google reassesses the site.

Author

Harshit Kumar is an AI SEO Specialist with over 7 years of experience helping businesses navigate Google Search updates, technical SEO challenges, and advanced AI-driven optimization. He specializes in diagnosing ranking drops, implementing proven recovery strategies, and building future-ready SEO frameworks.
