Resolving the KeyError: ‘latestUpdate’ in the Google Indexing API

The Google Indexing API lets you notify Google when URLs are added or updated so that Google can schedule them for a fresh crawl. It is intended for time-sensitive content such as job postings or live events. However, when using the API, you may encounter the following error:

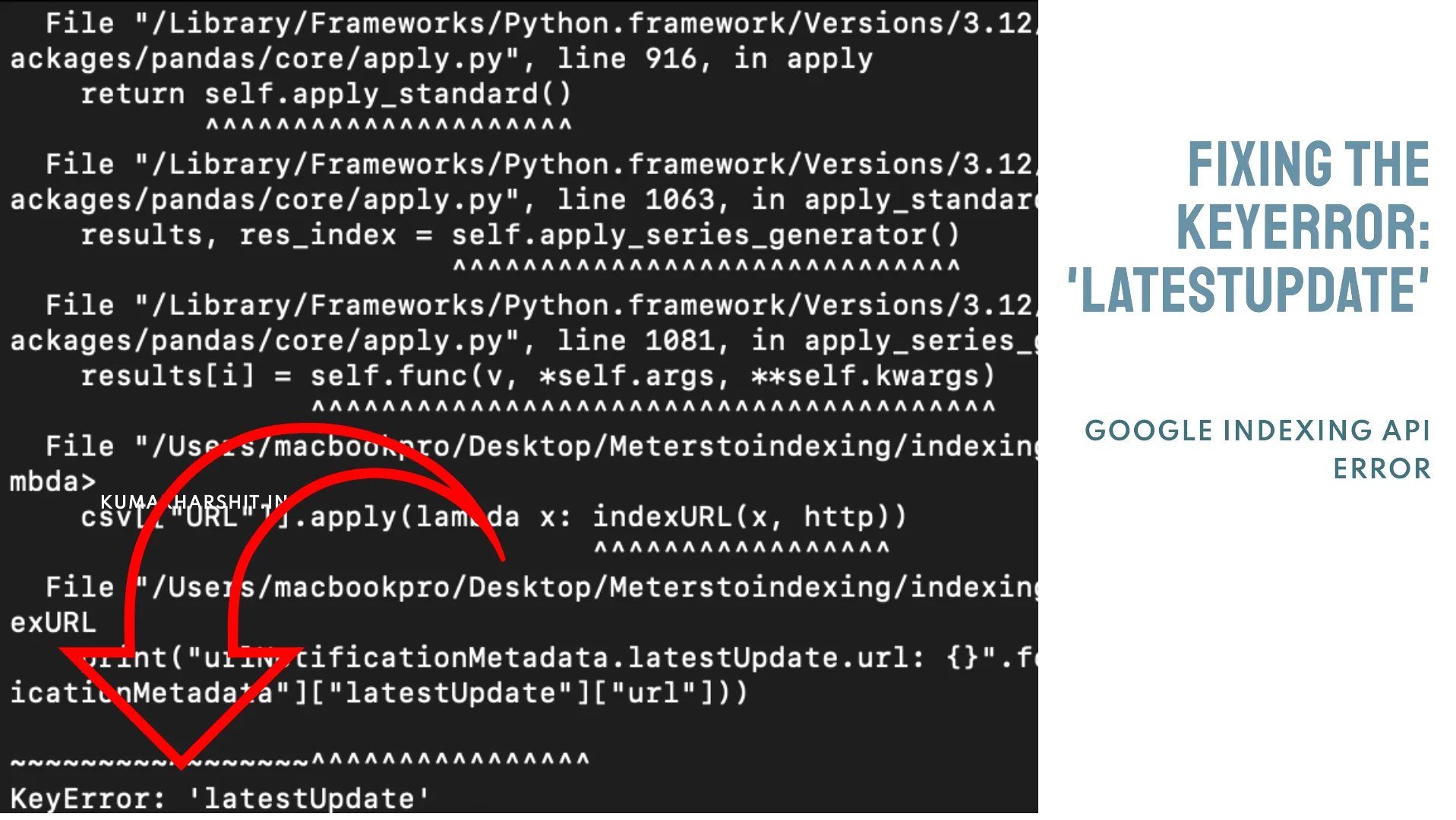

KeyError: 'latestUpdate'

This error occurs when your script tries to access the latestUpdate field in the response from Google’s Indexing API, but that field is not present in the returned data. In this article, we discuss why this happens, how to make the script handle such cases, and what you can do to help Google crawl and index your pages.

Understanding the Problem

When you publish a notification with the Indexing API, the response typically contains a latestUpdate field inside urlNotificationMetadata. According to Google’s API reference, this field describes the most recent URL_UPDATED notification Google has received for the URL, including its url, type, and notifyTime.

However, latestUpdate is not guaranteed to be present. If the URL hasn’t been crawled or updated yet, the field may be missing from the API response, and if your script accesses it without checking first, Python raises a KeyError.
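To see the error in isolation, the snippet below performs the same kind of direct lookup against a hypothetical response dictionary that lacks latestUpdate. The helper name read_latest_update_url and the sample data are illustrative assumptions, not part of the original script.

```python
def read_latest_update_url(result):
    # Direct indexing, as in the original script: raises KeyError if any key is absent
    return result["urlNotificationMetadata"]["latestUpdate"]["url"]


# Hypothetical response: urlNotificationMetadata is present, latestUpdate is not
sample = {"urlNotificationMetadata": {"url": "https://example.com/jobs/42"}}

try:
    read_latest_update_url(sample)
except KeyError as exc:
    print(f"KeyError: {exc}")  # prints: KeyError: 'latestUpdate'
```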

Causes of the KeyError: ‘latestUpdate’

- The URL has not been indexed yet: If Google hasn’t crawled the URL yet, the latestUpdate field may not be present in the API response.

- Crawling Delay: Even when the Indexing API accepts your URL, it may take some time before Googlebot crawls the page.

- API Usage Beyond Intended Content: Google documents the Indexing API only for pages with JobPosting or BroadcastEvent (embedded in a VideoObject) structured data. If you submit regular pages, such as blog posts, Google may not prioritize them for immediate crawling.

Fixing the KeyError: ‘latestUpdate’

To resolve the error, you need to modify your script to check whether the latestUpdate field exists before trying to access it. This will prevent the KeyError from occurring.

Original Problematic Code:

```python
print("urlNotificationMetadata.latestUpdate.url: {}".format(result["urlNotificationMetadata"]["latestUpdate"]["url"]))
```

This line assumes that the latestUpdate field is always present, which isn’t true. Instead, we need to handle this case gracefully.

Corrected Code:

```python
if "latestUpdate" in result["urlNotificationMetadata"]:
    print("urlNotificationMetadata.latestUpdate.url: {}".format(result["urlNotificationMetadata"]["latestUpdate"]["url"]))
else:
    print("No latestUpdate available for this URL.")
```

Here, the script first checks whether the latestUpdate field exists in urlNotificationMetadata. If it doesn’t, the script prints a message saying that no latestUpdate is available, instead of raising a KeyError.
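An equivalent approach uses dict.get(), which returns None (or a default you supply) instead of raising. This is a minimal sketch, and the function name describe_latest_update is hypothetical. Note that it also returns the fallback message for error responses that lack urlNotificationMetadata, so check for an "error" key first if you need to tell those two cases apart.

```python
def describe_latest_update(result):
    # dict.get() returns a default instead of raising KeyError when a key is absent
    metadata = result.get("urlNotificationMetadata", {})
    latest = metadata.get("latestUpdate")
    if latest is None:
        return "No latestUpdate available for this URL."
    return "urlNotificationMetadata.latestUpdate.url: {}".format(latest.get("url"))


print(describe_latest_update({"urlNotificationMetadata": {"url": "https://example.com/jobs/42"}}))
# prints: No latestUpdate available for this URL.
```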

Full Script with Error Handling

Here’s the updated version of your script with proper error handling and additional debugging to print the entire API response:

```python
import json

import httplib2
import pandas as pd
from oauth2client.service_account import ServiceAccountCredentials

# https://developers.google.com/search/apis/indexing-api/v3/prereqs#header_2
JSON_KEY_FILE = "kumarharshit.in.json"  # path to your service account key file
SCOPES = ["https://www.googleapis.com/auth/indexing"]
ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"

credentials = ServiceAccountCredentials.from_json_keyfile_name(JSON_KEY_FILE, scopes=SCOPES)
http = credentials.authorize(httplib2.Http())


def indexURL(urls, http):
    for u in urls:
        payload = {
            "url": u.strip(),
            "type": "URL_UPDATED",
        }
        response, content = http.request(
            ENDPOINT,
            method="POST",
            body=json.dumps(payload),
            headers={"Content-Type": "application/json"},
        )

        # Print the HTTP response (status and headers) for debugging
        print("HTTP Response: ", response)
        result = json.loads(content.decode())
        # Print the entire API result for debugging
        print("Full API Result: ", json.dumps(result, indent=4))

        # Error responses contain "error" instead of "urlNotificationMetadata"
        if "error" in result:
            err = result["error"]
            print(f"Error({err['code']} - {err['status']}): {err['message']}")
            continue

        metadata = result["urlNotificationMetadata"]
        print(f"urlNotificationMetadata.url: {metadata['url']}")

        # Handle the case where 'latestUpdate' is missing
        if "latestUpdate" in metadata:
            latest = metadata["latestUpdate"]
            print(f"latestUpdate.url: {latest['url']}")
            print(f"latestUpdate.type: {latest['type']}")
            print(f"latestUpdate.notifyTime: {latest['notifyTime']}")
        else:
            print("No latestUpdate available for this URL.")


# Load data.csv (expects a "URL" column) and submit every URL in it
df = pd.read_csv("data.csv")
indexURL(df["URL"].dropna().astype(str).tolist(), http)
```

How to Check if Your URL Is Indexed

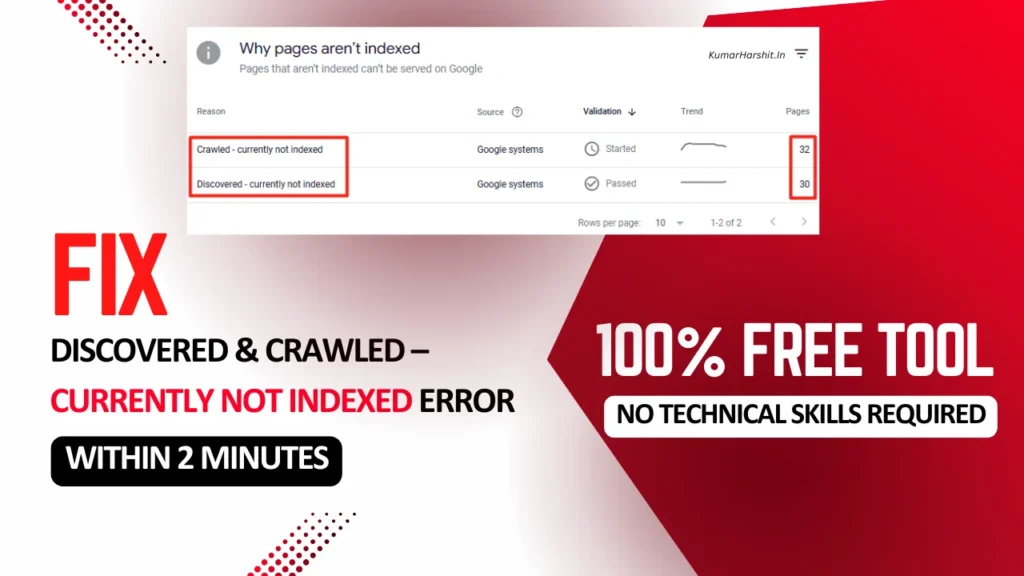

If you’re still not seeing the latestUpdate after submitting your URL through the Indexing API, you can manually check the indexing status of your URL using the Google Search Console URL Inspection Tool.

Steps:

- Open Google Search Console.

- Enter the URL in the URL Inspection Tool.

- If the URL is not indexed, you can click on “Request Indexing” to manually request crawling.

This can often speed up the process, especially if you’re working with non-time-sensitive content.

When This Solution Might Not Work

- Quota Issues: You might have exceeded the Indexing API’s daily quota (by default, 200 publish requests per day per project). Requests beyond the quota are rejected with an error response instead of urlNotificationMetadata. If you’ve reached the limit, wait until the next day to submit more URLs.

- Content Type: The Indexing API is designed for job postings and live events. Regular content like blog posts might not be indexed immediately, even after using the API.

- Site Issues: If your site has problems like robots.txt blocking Googlebot, noindex tags, or other server-side issues, your URL won’t be indexed. Use the URL Inspection Tool to check for these issues.
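If you have more URLs than the quota allows, one way to stay within it is to split the list into daily batches and stop as soon as the API reports a quota error. The sketch below assumes the default limit of 200 publish requests per day, and it assumes quota rejections come back as an error object with code 429 or status RESOURCE_EXHAUSTED, the usual convention for Google APIs; confirm both against your project’s quota page and the responses you actually receive. The names daily_batches and is_quota_error are hypothetical.

```python
# Assumption: default publish quota; check the actual limit for your Cloud project
DAILY_PUBLISH_QUOTA = 200


def daily_batches(urls, quota=DAILY_PUBLISH_QUOTA):
    # Split the URL list into consecutive chunks no larger than the daily quota
    return [urls[i:i + quota] for i in range(0, len(urls), quota)]


def is_quota_error(result):
    # Assumption: quota rejections use code 429 / status RESOURCE_EXHAUSTED
    error = result.get("error", {})
    return error.get("code") == 429 or error.get("status") == "RESOURCE_EXHAUSTED"


urls = [f"https://example.com/jobs/{n}" for n in range(450)]  # hypothetical URLs
batches = daily_batches(urls)
print([len(b) for b in batches])  # prints: [200, 200, 50]
```

You could submit one batch per day with indexURL(), and inside its loop break out early when is_quota_error(result) is true.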

How to Recognize a Successful API Request

Once you run the Python script and submit a URL using the Google Indexing API, it is important to know how to confirm that the request was successful and whether your URL is being processed for indexing.

1. Check the HTTP Response Status Code

A status code of 200 indicates that the request was processed successfully: the Indexing API accepted your notification, and Google can schedule the URL for crawling. It does not guarantee that the URL will be crawled or indexed immediately.
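With httplib2, the status code is available as response.status on the first value returned by http.request(). A small check that combines it with the parsed body might look like the sketch below; publish_succeeded is a hypothetical helper, not part of the Indexing API.

```python
def publish_succeeded(status, result):
    """Return True when a publish call looks successful.

    status: the integer HTTP status, e.g. response.status from httplib2
    result: the parsed JSON body, e.g. json.loads(content.decode())
    """
    return status == 200 and "error" not in result and "urlNotificationMetadata" in result


# Inside indexURL(), after parsing the body, you might write:
#     if not publish_succeeded(response.status, result):
#         print("Submission was not accepted:", result.get("error"))
```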

2. Look for URL Submission in the API Response

If the URL is successfully processed, the script prints a line like the following:

urlNotificationMetadata.url: https://your-url.com

3. Handling ‘latestUpdate’ Availability

When latestUpdate is present, it contains the url, type, and notifyTime of the most recent URL_UPDATED notification recorded for the URL. If the URL hasn’t been indexed yet, this field may be missing.

- If you see a message like “No latestUpdate available for this URL”, it means the URL hasn’t been crawled yet, but it has been successfully submitted.

- Once the URL is crawled and indexed, you should see the latestUpdate field populated with the latest update information.
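Besides publishing, Google’s Indexing API reference documents a getMetadata call (GET https://indexing.googleapis.com/v3/urlNotifications/metadata?url=...) that returns the urlNotificationMetadata for a URL you have already submitted, so you can re-check latestUpdate later without publishing again. The sketch below assumes the authorized httplib2 object http from the full script above; metadata_url, latest_update_time, and check_url are hypothetical helper names.

```python
import json
from urllib.parse import urlencode

METADATA_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications/metadata"


def metadata_url(page_url):
    # The page URL is passed as a percent-encoded "url" query parameter
    return f"{METADATA_ENDPOINT}?{urlencode({'url': page_url})}"


def latest_update_time(metadata):
    # Return latestUpdate.notifyTime, or None when latestUpdate is absent
    return metadata.get("latestUpdate", {}).get("notifyTime")


def check_url(page_url, http):
    # `http` is assumed to be an authorized httplib2 object with the indexing scope
    response, content = http.request(metadata_url(page_url), method="GET")
    metadata = json.loads(content.decode())
    if response.status != 200:
        print(f"getMetadata failed with HTTP {response.status}: {metadata}")
        return None
    return latest_update_time(metadata)
```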

Conclusion

The KeyError: 'latestUpdate' occurs when the Indexing API response does not include the latestUpdate field, which typically means that Google hasn’t crawled or updated the URL yet. By modifying your Python script to handle this case and manually checking the URL status in Google Search Console, you can avoid the error and better manage your URL submissions.

This solution will help you identify when a URL has been submitted but not yet crawled, allowing you to take further action if necessary.

FAQ

1. What is the Google Indexing API?

The Google Indexing API allows developers to programmatically notify Google of changes to web pages, helping to expedite the indexing of time-sensitive content.

2. What types of content are best suited for the Indexing API?

The Indexing API is primarily designed for time-sensitive content, such as job postings, live events, and similar updates.

3. What does the KeyError: ‘latestUpdate’ mean?

This error indicates that the latestUpdate field is missing from the API response, which typically means that Google has not crawled or updated the URL yet.

4. How can I fix the KeyError in my script?

Modify your script to check for the existence of the latestUpdate field before accessing it, which will prevent the KeyError from occurring.

5. What should I do if my URL is not indexed after using the API?

You can manually check the indexing status of your URL using the Google Search Console URL Inspection Tool and request indexing if necessary.

6. How do I check if I have exceeded my API quota?

Monitor your API usage through the Google Cloud Console, where you can see your daily submission counts and quotas for the Indexing API.

7. Can I use the Indexing API for regular blog posts?

While you can submit regular blog posts, the Indexing API is not optimized for them and may not prioritize immediate indexing for such content.

8. What should I do if my site is blocking Googlebot?

Check your robots.txt file and ensure there are no rules preventing Googlebot from crawling your site.
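Python’s standard library includes urllib.robotparser, which can give a quick first check of whether a robots.txt file blocks Googlebot from a URL. It applies the first matching rule, whereas Google uses the most specific match, so treat the result as a rough check and confirm with the URL Inspection Tool. The robots.txt lines and URLs below are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_lines, url):
    # Parse robots.txt content supplied as a list of lines and test it for Googlebot
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch("Googlebot", url)


# Hypothetical robots.txt content
robots_txt = [
    "User-agent: Googlebot",
    "Disallow: /private/",
]

print(googlebot_allowed(robots_txt, "https://example.com/private/page"))  # prints: False
print(googlebot_allowed(robots_txt, "https://example.com/jobs/42"))  # prints: True
```

For a live site, you can call parser.set_url("https://your-site/robots.txt") followed by parser.read() instead of parse().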

9. How can I recognize a successful API request?

A successful request will return an HTTP status code of 200, indicating that Google has accepted your URL for crawling.

10. Is there a way to speed up indexing after submitting URLs?

Using the URL Inspection Tool in Google Search Console, you can manually request indexing for specific URLs, which may expedite the process.

About the Author:

Harshit Kumar is a dedicated SEO Specialist with extensive experience in addressing indexing challenges and enhancing website visibility. With a strong focus on technical SEO and a proven ability to deliver rapid results, Harshit has successfully assisted numerous websites in achieving high rankings on Google. If you’re encountering indexing problems or seeking to elevate your SEO strategies, Harshit is the trusted SEO Freelancer to guide you through all aspects of SEO.
